Google’s trying to make waves with Gemini, its flagship suite of generative AI models, apps and services.

So what’s Google Gemini, exactly? How can you use it? And how does Gemini stack up to the competition?

To make it easier to keep up with the latest Gemini developments, we’ve put together this handy guide, which we’ll keep updated as new Gemini models, features and news about Google’s plans for Gemini are released.

What is Gemini?

Gemini is Google’s long-promised, next-gen generative AI model family, developed by Google’s AI research labs DeepMind and Google Research. It comes in four flavors:

- Gemini Ultra, the most performant Gemini model.

- Gemini Pro, a lightweight alternative to Ultra.

- Gemini Flash, a speedier, “distilled” version of Pro.

- Gemini Nano, two small models — Nano-1 and the more capable Nano-2 — meant to run offline on mobile devices.

All Gemini models were trained to be natively multimodal — in other words, able to work with and analyze more than just text. Google says that they were pre-trained and fine-tuned on a variety of public, proprietary and licensed audio, images and videos, a large set of codebases and text in different languages.

This sets Gemini apart from models such as Google’s own LaMDA, which was trained exclusively on text data. LaMDA can’t understand or generate anything beyond text (e.g., essays, email drafts), but that isn’t necessarily the case with Gemini models.

We’ll note here that the ethics and legality of training models on public data, in some cases without the data owners’ knowledge or consent, are murky indeed. Google has an AI indemnification policy to shield certain Google Cloud customers from lawsuits should they face them, but this policy contains carve-outs. Proceed with caution, particularly if you intend to use Gemini commercially.

What’s the difference between the Gemini apps and Gemini models?

Google, proving once again that it lacks a knack for branding, didn’t make it clear from the outset that Gemini is separate and distinct from the Gemini apps on the web and mobile (formerly Bard).

The Gemini apps are clients that connect to various Gemini models — Gemini Ultra (with Gemini Advanced, see below) and Gemini Pro so far — and layer chatbot-like interfaces on top. Think of them as front ends for Google’s generative AI, analogous to OpenAI’s ChatGPT and Anthropic’s Claude family of apps.

Gemini on the web lives at gemini.google.com. On Android, the Gemini app replaces the existing Google Assistant app. And on iOS, the Google and Google Search apps serve as that platform’s Gemini clients.

Gemini apps can accept images as well as voice commands and text — including files like PDFs and soon videos, either uploaded or imported from Google Drive — and generate images. As you’d expect, conversations with Gemini apps on mobile carry over to Gemini on the web and vice versa if you’re signed in to the same Google Account in both places.

Gemini in Gmail, Docs, Chrome, dev tools and more

The Gemini apps aren’t the only means of recruiting Gemini models’ assistance with tasks. Slowly but surely, Gemini-imbued features are making their way into staple Google apps and services like Gmail and Google Docs.

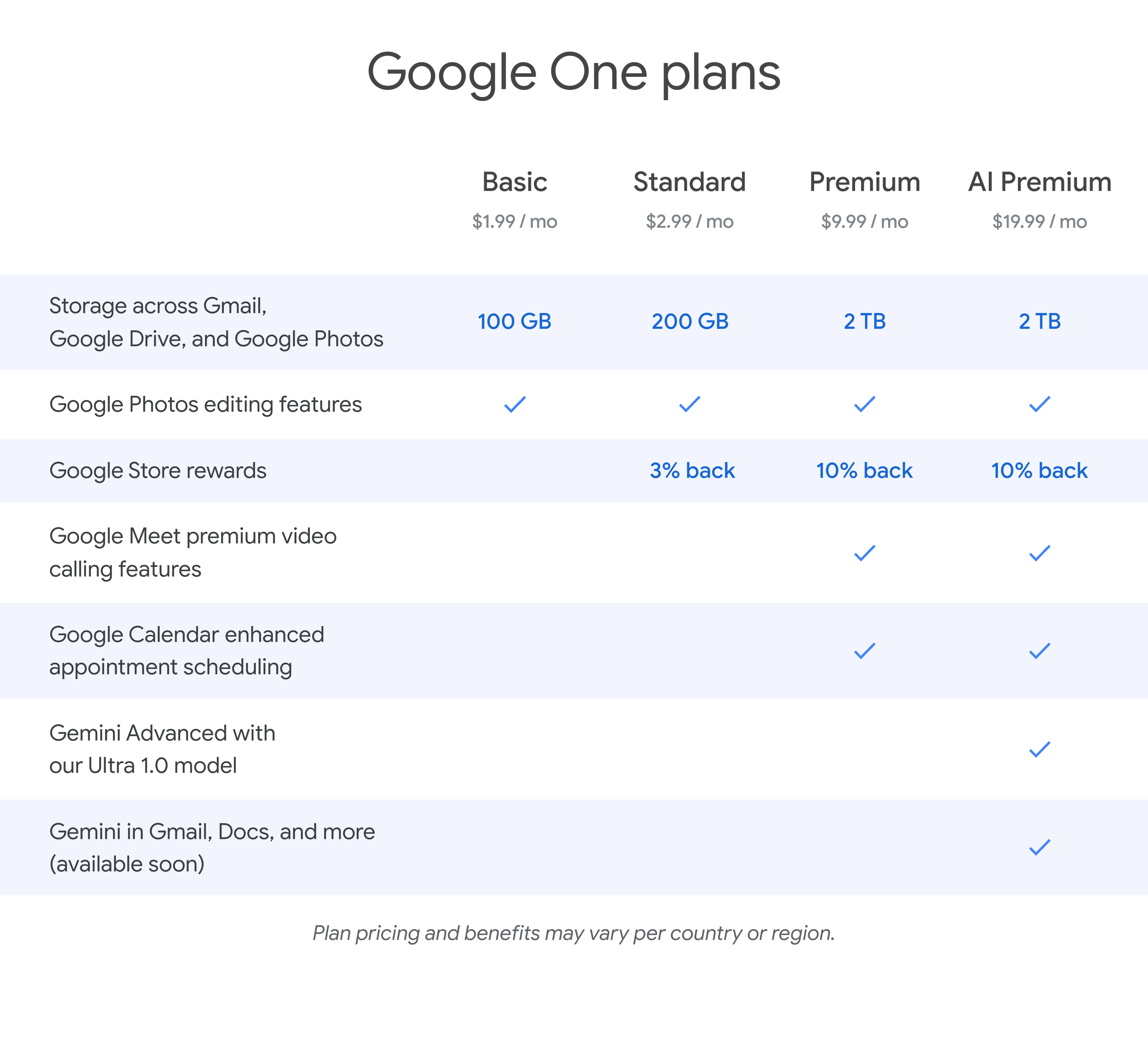

To take advantage of most of these, you’ll need the Google One AI Premium Plan. Technically a part of Google One, the AI Premium Plan costs $20 per month and provides access to Gemini in Google Workspace apps like Docs, Slides, Sheets and Meet. It also enables what Google calls Gemini Advanced, which brings Gemini Ultra to the Gemini apps plus support for analyzing and answering questions about uploaded files.

Gemini Advanced users also get extras here and there, like trip planning in Google Search, which creates custom travel itineraries from prompts. Taking into account things like flight times (from emails in a user’s Gmail inbox), meal preferences and information about local attractions (from Google Search and Maps data), as well as the distances between those attractions, Gemini will generate an itinerary that updates automatically to reflect any changes.

In Gmail, Gemini lives in a side panel that can write emails and summarize message threads. You’ll find the same panel in Docs, where it helps you write and refine your content and brainstorm new ideas. Gemini in Slides generates slides and custom images. And Gemini in Google Sheets tracks and organizes data, creating tables and formulas.

Gemini’s reach extends to Drive, as well, where it can summarize files and give quick facts about a project. In Meet, meanwhile, Gemini translates captions into additional languages.

Gemini recently came to Google’s Chrome browser in the form of an AI writing tool. You can use it to write something completely new or rewrite existing text; Google says it’ll take into account the webpage you’re on to make recommendations.

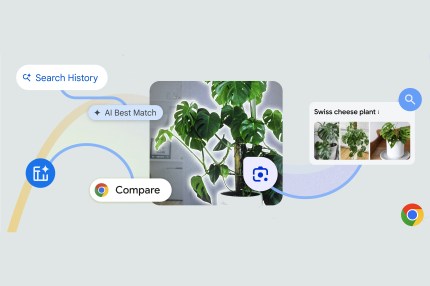

Elsewhere, you’ll find hints of Gemini in Google’s database products, cloud security tools, app development platforms (including Firebase and Project IDX), not to mention apps like Google TV (where Gemini generates descriptions for movies and TV shows), Google Photos (where it handles natural language search queries) and the NotebookLM note-taking assistant.

Code Assist (formerly Duet AI for Developers), Google’s suite of AI-powered assistance tools for code completion and generation, is offloading heavy computational lifting to Gemini. Google’s security products are underpinned by Gemini as well, including Gemini in Threat Intelligence, which can analyze large portions of potentially malicious code and let users perform natural language searches for ongoing threats or indicators of compromise.

Gemini Gems custom chatbots

Gems, announced at Google I/O 2024, are custom chatbots powered by Gemini models that Gemini Advanced users will be able to create in the future. Gems can be generated from natural language descriptions (for example, “You’re my running coach. Give me a daily running plan”) and shared with others or kept private.

Eventually, Gems will be able to tap an expanded set of integrations with Google services, including Google Calendar, Tasks, Keep and YouTube Music, to complete various tasks.

Gemini Live in-depth voice chats

A new experience called Gemini Live, exclusive to Gemini Advanced subscribers, will arrive soon on the Gemini apps on mobile, letting users have “in-depth” voice chats with Gemini.

With Gemini Live enabled, users will be able to interrupt Gemini while the chatbot’s speaking to ask clarifying questions, and it’ll adapt to their speech patterns in real time. And Gemini will be able to see and respond to users’ surroundings, either via photos or video captured by their smartphones’ cameras.

Live is also designed to serve as a virtual coach of sorts, helping users rehearse for events, brainstorm ideas and so on. For instance, Live can suggest which skills to highlight in an upcoming job or internship interview, and it can give public speaking advice.

What can the Gemini models do?

Because Gemini models are multimodal, they can perform a range of multimodal tasks, from transcribing speech to captioning images and videos in real time. Many of these capabilities have reached the product stage (as alluded to in the previous section), and Google is promising much more in the not-too-distant future.

Of course, it’s a bit hard to take the company at its word.

Google seriously underdelivered with the original Bard launch. More recently, it ruffled feathers with a video purporting to show Gemini’s capabilities that was more or less aspirational, not live, and with an image generation feature that turned out to be offensively inaccurate.

Also, Google offers no fix for some of the underlying problems with generative AI tech today, like its encoded biases and tendency to make things up (i.e., hallucinate). Neither do its rivals, but it’s something to keep in mind when considering using or paying for Gemini.

Assuming for the purposes of this article that Google is being truthful with its recent claims, here’s what the different tiers of Gemini can do now and what they’ll be able to do once they reach their full potential:

What you can do with Gemini Ultra

Google says that Gemini Ultra — thanks to its multimodality — can be used to help with things like physics homework, solving problems step-by-step on a worksheet and pointing out possible mistakes in already filled-in answers.

Ultra can also be applied to tasks such as identifying scientific papers relevant to a problem, Google says. The model could extract information from several papers, for instance, and update a chart from one by generating the formulas necessary to re-create the chart with more timely data.

Gemini Ultra technically supports image generation. But that capability hasn’t made its way into the productized version of the model yet — perhaps because the mechanism is more complex than how apps such as ChatGPT generate images. Rather than feed prompts to an image generator (like DALL-E 3, in ChatGPT’s case), Gemini outputs images “natively,” without an intermediary step.

Ultra is available as an API through Vertex AI, Google’s fully managed AI dev platform, and AI Studio, Google’s web-based tool for app and platform developers. It also powers Google’s Gemini apps, but not for free. Once again, access to Ultra through any Gemini app requires subscribing to the AI Premium Plan.

Gemini Pro’s capabilities

Google says that Gemini Pro is an improvement over LaMDA in its reasoning, planning and understanding capabilities. The latest version, Gemini 1.5 Pro, exceeds even Ultra’s performance in some areas, Google claims.

Gemini 1.5 Pro is improved in a number of areas compared with its predecessor, Gemini 1.0 Pro, perhaps most obviously in the amount of data that it can process. Gemini 1.5 Pro can take in up to 1.4 million words, two hours of video or 22 hours of audio, and reason across or answer questions about all that data.

1.5 Pro became generally available on Vertex AI and AI Studio in June alongside a feature called code execution, which aims to reduce bugs in code that the model generates by iteratively refining that code over several steps. (Gemini Flash supports code execution, too.)

Within Vertex AI, developers can customize Gemini Pro to specific contexts and use cases via a fine-tuning or “grounding” process. For example, Pro (along with other Gemini models) can be instructed to use data from third-party providers like Moody’s, Thomson Reuters, ZoomInfo and MSCI, or source information from corporate data sets or Google Search instead of its wider knowledge bank. Gemini Pro can also be connected to external, third-party APIs to perform particular actions, like automating a workflow.
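The general idea behind grounding is to pull relevant passages from a trusted source and tell the model to answer only from them. The toy Python sketch below shows that idea. It is not how Vertex AI implements grounding. The documents are hypothetical, the keyword-overlap retrieval is a crude stand-in for a real search index, and the code only builds the prompt text without calling any Gemini API.

```python
import re

# Hypothetical stand-in for a "corporate data set"; not real Vertex AI data.
DOCUMENTS = [
    "Refunds are issued within 14 days of a returned item arriving.",
    "Standard shipping takes 3 to 5 business days.",
]


def words(text: str) -> set[str]:
    """Lowercase word set, used for a crude keyword-overlap score."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, k: int = 1) -> list[str]:
    """Return the k documents sharing the most words with the question."""
    q = words(question)
    ranked = sorted(DOCUMENTS, key=lambda doc: len(q & words(doc)), reverse=True)
    return ranked[:k]


def grounded_prompt(question: str) -> str:
    """Build a prompt that tells the model to answer only from retrieved sources."""
    sources = "\n".join(f"- {doc}" for doc in retrieve(question))
    return (
        "Answer using only the sources below. "
        "If they don't contain the answer, say so.\n\n"
        f"Sources:\n{sources}\n\nQuestion: {question}"
    )


if __name__ == "__main__":
    print(grounded_prompt("How long do refunds take?"))
```

A production system would swap the keyword score for a proper search or vector index. The instruction to refuse when the sources are silent is what keeps answers tied to the company's own data rather than the model's wider knowledge.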

AI Studio offers templates for creating structured chat prompts with Pro. Developers can control the model’s creative range and provide examples to give tone and style instructions — and also tune Pro’s safety settings.
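AI Studio's own templates aren't reproduced here. The Python sketch below approximates the same three controls (example turns for tone, a creativity dial and safety settings) as a request body, assuming the public Gemini API's REST `generateContent` format (`contents`, `generationConfig`, `safetySettings`). Treating "creative range" as sampling temperature is our interpretation. The example turns are hypothetical, and the code only builds JSON without sending it anywhere.

```python
import json

# Hypothetical few-shot pair (user turn, model turn) that demonstrates tone and style.
STYLE_EXAMPLES = [
    ("Describe our new water bottle.", "Cold for 24 hours. Tough enough for any trail."),
]


def build_request(prompt: str, temperature: float = 0.4) -> dict:
    """Assemble a generateContent-style JSON body (assumed Gemini API REST format)."""
    contents = []
    for user_text, model_text in STYLE_EXAMPLES:
        contents.append({"role": "user", "parts": [{"text": user_text}]})
        contents.append({"role": "model", "parts": [{"text": model_text}]})
    contents.append({"role": "user", "parts": [{"text": prompt}]})
    return {
        "contents": contents,
        # Lower temperature makes sampling less random, roughly narrowing "creative range".
        "generationConfig": {"temperature": temperature},
        # Loosen one safety filter so only high-probability harassment is blocked.
        "safetySettings": [
            {"category": "HARM_CATEGORY_HARASSMENT", "threshold": "BLOCK_ONLY_HIGH"},
        ],
    }


if __name__ == "__main__":
    print(json.dumps(build_request("Describe our new running shoe."), indent=2))
```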

Vertex AI Agent Builder lets people build Gemini-powered “agents” within Vertex AI. For example, a company could create an agent that analyzes previous marketing campaigns to understand a brand style, and then applies that knowledge to help generate new ideas consistent with the style.

Gemini Flash is for less demanding work

For less demanding applications, there’s Gemini Flash. The newest version is 1.5 Flash.

An offshoot of Gemini Pro that’s small and efficient, built for narrow, high-frequency generative AI workloads, Flash is multimodal like Gemini Pro, meaning it can analyze audio, video and images as well as text (but only generate text).

Flash is particularly well-suited for tasks such as summarization, chat apps, image and video captioning and data extraction from long documents and tables, Google says. It’ll be generally available via Vertex AI and AI Studio by mid-July.

Devs using Flash and Pro can optionally leverage context caching, which lets them store large amounts of information (say, a knowledge base or database of research papers) in a cache that Gemini models can quickly and relatively cheaply access. Context caching carries an additional fee on top of other Gemini model usage fees, however.

Gemini Nano can run on your phone

Gemini Nano is a much smaller version of the Gemini Pro and Ultra models, and it’s efficient enough to run directly on (some) phones instead of sending the task to a server somewhere. So far, Nano powers a couple of features on the Pixel 8 Pro, Pixel 8 and Samsung Galaxy S24, including Summarize in Recorder and Smart Reply in Gboard.

The Recorder app, which lets users push a button to record and transcribe audio, includes a Gemini-powered summary of recorded conversations, interviews, presentations and other audio snippets. Users get summaries even if they don’t have a signal or Wi-Fi connection — and in a nod to privacy, no data leaves their phone in the process.

Nano is also in Gboard, Google’s keyboard replacement. There, it powers a feature called Smart Reply, which helps to suggest the next thing you’ll want to say when having a conversation in a messaging app. The feature initially works only with WhatsApp but will come to more apps over time, Google says.

In the Google Messages app on supported devices, Nano drives Magic Compose, which can craft messages in styles like “excited,” “formal” and “lyrical.”

Google says that a future version of Android will tap Nano to alert users to potential scams during calls. And soon, TalkBack, Google’s accessibility service, will employ Nano to create aural descriptions of objects for low-vision and blind users.

Is Gemini better than OpenAI’s GPT-4?

Google has several times touted Gemini’s superiority on benchmarks, claiming that Gemini Ultra exceeds current state-of-the-art results on “30 of the 32 widely used academic benchmarks used in large language model research and development.” But leaving aside the question of whether benchmarks really indicate a better model, the scores Google points to appear to be only marginally better than OpenAI’s GPT-4 models.

Meanwhile, OpenAI’s latest flagship model, GPT-4o, pulls ahead of 1.5 Pro pretty substantially on text evaluation, visual understanding and audio translation performance. Anthropic’s Claude 3.5 Sonnet beats them both, though perhaps not for long, given the AI industry’s breakneck pace.

How much do the Gemini models cost?

Gemini 1.0 Pro (the first version of Gemini Pro), 1.5 Pro and Flash are available through Google’s Gemini API for building apps and services, all with free options. But the free options impose usage limits and leave out some features, like context caching.

Otherwise, Gemini models are pay-as-you-go. Here’s the base pricing (not including add-ons like context caching) as of June 2024:

- Gemini 1.0 Pro: 50 cents per 1 million input tokens, $1.50 per 1 million output tokens

- Gemini 1.5 Pro: $3.50 per 1 million input tokens (for prompts up to 128,000 tokens) or $7 per 1 million input tokens (for prompts longer than 128,000 tokens); $10.50 per 1 million output tokens (for prompts up to 128,000 tokens) or $21 per 1 million output tokens (for prompts longer than 128,000 tokens)

- Gemini 1.5 Flash: 35 cents per 1 million input tokens (for prompts up to 128,000 tokens) or 70 cents per 1 million input tokens (for prompts longer than 128,000 tokens); $1.05 per 1 million output tokens (for prompts up to 128,000 tokens) or $2.10 per 1 million output tokens (for prompts longer than 128,000 tokens)
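Because the 1.5 models switch rates at 128,000 tokens, a rough cost estimate depends on prompt length. The Python sketch below applies the base rates listed above, with Gemini 1.5 Pro's short-prompt input rate taken as $3.50. It assumes, as the list's wording suggests, that the tier is chosen by the prompt's length and applied to every input and output token in that request. It ignores add-ons like context caching and free-tier allowances, and the dictionary keys are just labels.

```python
# Base rates in US dollars per 1 million tokens (June 2024), per model label:
# (input, prompt <=128K; input, prompt >128K; output, prompt <=128K; output, prompt >128K)
PRICES_PER_MILLION = {
    "gemini-1.0-pro": (0.50, 0.50, 1.50, 1.50),  # no long-prompt tier listed
    "gemini-1.5-pro": (3.50, 7.00, 10.50, 21.00),
    "gemini-1.5-flash": (0.35, 0.70, 1.05, 2.10),
}

LONG_PROMPT_THRESHOLD = 128_000  # tokens


def estimate_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Estimate the base cost in dollars of a single request.

    Assumption: the rate tier is picked by prompt (input) length and applied
    to all input and output tokens in the request.
    """
    in_short, in_long, out_short, out_long = PRICES_PER_MILLION[model]
    long_prompt = input_tokens > LONG_PROMPT_THRESHOLD
    in_rate = in_long if long_prompt else in_short
    out_rate = out_long if long_prompt else out_short
    return (input_tokens * in_rate + output_tokens * out_rate) / 1_000_000


if __name__ == "__main__":
    # A 100,000-token prompt with a 2,000-token answer on 1.5 Pro.
    print(f"${estimate_cost('gemini-1.5-pro', 100_000, 2_000):.4f}")  # $0.3710
```

Under this assumption, a prompt that crosses the threshold doubles the rate on every token, not just the overflow. That makes it worth trimming prompts that sit just above 128,000 tokens.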

Tokens are subdivided bits of raw data, like the syllables “fan,” “tas” and “tic” in the word “fantastic”; 1 million tokens is equivalent to about 700,000 words. “Input” refers to tokens fed into the model, while “output” refers to tokens that the model generates.
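The 700,000-words-per-million-tokens rule of thumb works out to about 0.7 words per token. That is enough to translate word counts into token budgets. For example, Gemini 1.5 Pro's 1.4-million-word capacity comes to roughly 2 million tokens, and the 128,000-token pricing threshold sits near 89,600 words. The Python sketch below does that arithmetic. Real counts vary with the tokenizer, language and content, so treat the results as ballpark figures.

```python
# Rule of thumb from the text: 1 million tokens ~ 700,000 words (~0.7 words per token).
# Actual token counts depend on the tokenizer and the text itself.
WORDS_PER_TOKEN = 0.7


def words_to_tokens(words: int) -> int:
    """Rough token estimate for a text of the given word count."""
    return round(words / WORDS_PER_TOKEN)


def tokens_to_words(tokens: int) -> int:
    """Rough word estimate for the given number of tokens."""
    return round(tokens * WORDS_PER_TOKEN)


if __name__ == "__main__":
    print(words_to_tokens(1_400_000))  # 2000000
    print(tokens_to_words(128_000))    # 89600
```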

Ultra pricing has yet to be announced, and Nano is still in early access.

Is Gemini coming to the iPhone?

It might! Apple and Google are reportedly in talks to put Gemini to use for a number of features to be included in an upcoming iOS update later this year. Nothing’s definitive, as Apple is also said to be in talks with OpenAI and has been working on developing its own generative AI capabilities.

Following a keynote presentation at WWDC 2024, Apple SVP Craig Federighi confirmed plans to work with additional third-party models including Gemini, but didn’t divulge additional details.

This post was originally published Feb. 16, 2024 and has since been updated to include new information about Gemini and Google’s plans for it.
